Attending my first conference! ACL 2020 from an undergrad's perspective

| Created | |

|---|---|

| Tags | ConferencesNLPResearch |

For non-NLP people reading this: ACL is the biggest conference for Natural Language Processing and has been growing rapidly the last years. Numerous top researchers and companies (Google, DeepMind, ...) attend to present their work.

This blog post is a (mostly) unfiltered collections of impressions of ACL 2020. For a more condensed, research-focused article, I can point to Vered Shwartz' Highlights of ACL 2020; or for a discussion on the virtual format to Yoav Goldberg's The missing pieces in virtual-ACL.

The post focuses on how it was to navigate an online conference for someone who is quite new to research. On the content side of ACL, I will mostly discuss the topic of Grounded Language.

So just four days before ACL 2020 was going to to start, I was in the middle of finalizing a paper submission to COLING and handing in my Bachelor thesis. So I had given no thought to ACL at all. I was not presenting any paper at ACL, it costs 125$ and I didn't have a clear objective of what to attend. So why attending? Luckily a colleague convinced me to sign up and I do not regret it! After all, I am seriously considering a PhD in this field and I also want to get to know people from academia and industry.

This was my very first conference. It would have been more personal and less overwhelming in person, but also: I couldn't have attended in person in Seattle.

Saturday

I got my password and I am now ready to go! It is all a bit overwhelming. Talks, QAs, Tutorials, so much to choose from and ways to make good or bad first impressions.

The first step is to make a schedule for next week, and trying to keep it light (since I am handing in my thesis in a few days). Do not fall into FOMO (Fear of missing out)!

I need to remind myself to stay humble and curious, instead of shy and intimidated by all the experts. Go out, understand, collaborate. That's all.

So to not get overwhelmed, I defined what I want to get out of the conference:

- looking for places to do a PhD or Master's, or also shorter research projects

- Get a solid overview of the current research and labs in the field of Grounded Language. This is the field I want to ultimately do my PhD in (not 100% sure yet). There is a ACL track dedicated to this called Language Grounding to Vision, Robotics and more.

- Broad overview of what Grounded Language is? Cognitive, Deep Learning, Robotics?

- Who are the big labs, professors, people? Where can I gain experience first?

- industry connections

- socialize with people and look at some random papers or papers from people I know

I attended the pre-social organized by Esther Seyffahrt in the evening where people read from their hilariously mis-transcribed talks. The irony here is of course that we are NLP-people with expert knowledge on transcription.

There is the Multi-Modal Dialogue Tutorial I consider. It is tagged as "cutting-edge" in contrast to the other category "introductory", so I hope I can take something away from it.

Sunday

I decided to not attend any tutorial, since I wanted to spend more time on figuring out the content I want to attend the next days and listen to the Keynote speech from Prof. Kathleen R. McKeown. The speech set the right tone for me as I was just getting overwhelmed by all the technical content. It provided historical context and humbled me as being part of a long and exciting community.

In the afternoon I joined the Undergrad Panel Discussion. It was chaotic. ~80 people attended. Researchers introduced themselves and their academic history, gave advice.

I also talked to Cornellians on Rocket Chat: Yoav Artzi and Alane Suhr. Yoav's group is definitely an option for a PhD so I was happy to get some first impressions.

Also Sabrina Mielke gave me a lot of cool tips for applying for a PhD in the U.S.

It starts to feel a bit like a social event also finally.

Monday

I had to write on my thesis a lot but definitely wanted to attend the QA on What is Learned in Visually Grounded Neural Syntax Acquisition by Noriyuki Kojima et al. as it is coming from Yoav Artzi's lab. I prepared for it by watching the video, skimming the paper and writing down questions. The main question that was answered for me during the QA was why it would make sense to use grounding for syntactic parsing at all. Example answer from Yoav Artzi: "Eating pizza with anchovis" → "Eating (pizza with anchovis)" or "(Eating pizza) with anchovis". A picture showing the pizza with anchovis on top might help choosing the correct one:

I also talked to some people privately on Rocket Chat about their PhD experience at Cornell or about possible research projects I could get involved with.

Later that day, I was watching the video on Integrating Multimodal Information in Large Pretrained Transformers and then joining the QA afterwards. The QA was fun, I mostly learnt about how the dataset looks like. It is about capturing sentiment with visual and acoustic modalities, next to language. The visual & acoustic time steps are aligned with the words directly. The visual & acoustic features are put into the model somewhere in the middle through "Attention Gating", which I sort of understood (It would have been so much easier and intuitive to grasp the concept in a physical conference setting, with him pointing at sutff and using gestures to explain!)

At conferences there are Birds of a Feather (BoF) events where people just casually chat and socialize. So I joined the BoF chat for Grounded Language. Soon researchers started discussing what Language Grounding actually is and it was fascinating how vague and uncertain everyone was. For me personally it boils down to what they describe in Experience Grounds Language, a paper that originally drew me into this research direction.

So in the evening I joined the BoF for Language Grounding zoom call and the conversation-starter questions that the organizers prepared were great:

- What was the first project you have pursued in this area?

- What papers should I read to have a good initial understanding of the field?

- What resources do you think are best to develop a good understanding of this area?

- What groups and/or projects would you recommend to follow to keep up with the state-of-the-art in this area?

- What advice would you give to someone interested in starting research in this area?

- What are your ongoing projects in this area?

However after reading these questions again I realized that few were actually addressed answered. These were the kinds of questions I would have had liked answers to! Great controversial question from Yonatan Bisk: Why are we at a language conference if we do multi-modal? What can we bring to the table that CV/robotics doesn't?

There were many people at the social I wasn't aware of as Language Grounding Researchers. So it was a good way to enlarge my list of labs to follow and potential places to apply to!

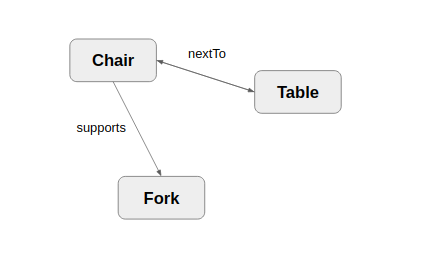

Desmond Elliott thought about: How do you fundamentally squeeze the auxiliary info into your primary language model? An important keyword I picked up: Scene Graphs, an interesting data structure for Computer Vision with semantics. A triple in this graph could be Supports(table, fork):

Tuesday

I spontaneously attended the DeepMind social hosted by e.g. Sebastian Ruder and asked questions like "Is language fundamental to Intelligence?". I got some good answers.

I also briefly joined Tal Linzen's QA for his theme paper How Can We Accelerate Progress Towards Human-like Linguistic that argues for new methods of evaluation. He publicly announced a PhD position at his lab and I can see myself applying. So it was definitely worth looking into it and getting to know his way of thinking.

Today was another BoF social for Language Grounding people! More than 100 people attended and it was cool to see and hear so many researchers I follow on Twitter; and also to hear from so many I haven't heard before.

As a inspiration to work on Grounded Language, someone in the social pointed to the last minutes of a debate between Chris Manning & Yann Le Cun in 2018 (I watched but have to say that they don't really touch on Grounded Language). Then people started naming their favourite multi-modal tasks: commonsense & pragmatics, teaching robots by demonstration to use language, Figuring out language acquisition in children...

For commonsense & pragmatics someone pointed to Raquel Fernandez' (University of Amsterdam) PhotoBook dataset, which is inspired by Tangram experiments. Another good pointer was the ALFRED benchmark. There were many research and researchers that I could mention here and hopefully I will find the time to look into them.

Another pressing issue (especially for roboticists) seemed to be the role of simulations. It was frowned upon in the past but now more and more people turn to them.

Then I posed a question that was received well:

As we are talking about "real" roboticists: We are language people talking about Grounding/Multi-Modal at a language conference. Do these conversations also happen at CV/Robotics conferences? How do their conversations and persepctives differ? Are we fundamentally the same community divided in several parts?

Others point out that the are definitely different communities, e.g. roboticists do not consider or appreciate the complexities of language. However there seems to be more convergence between the fields now.

I also had some very general thoughts on it:

You can probably "solve" robotics & vision without language. The best example are animals. But you probably can't "solve" language without some grounding. Therefore, when we get fundamentally stuck with solving language we have to turn to vision or robotics. But vision and robotics do usually not have to turn to language when they get stuck.

Raymond Mooney also pointed to probably one of the very few classes entirely dedicated to Grounded NLP, taught by himself: https://www.cs.utexas.edu/~mooney/gnlp/

Wednesday

I spent this day mostly on other things like my thesis and only did two small things at ACL:

First, I attended Alessandro Suglia's QA on the paper CompGuessWhat?!: A Multi-task Evaluation Framework for Grounded Language Learning. This was also interesting from a personal persective as we talked about the integrated Master's + PhD program of University of Edinburgh and Heriot Watt University.

Around noon I had a 15 minute conversation with Tal Linzen about a PhD position at his lab. This was quite fruitful and I can definitely see myself working under his supervision. I would've hoped for even more opportunities like this (personal conversations with professors or industry people) but unfortunately there weren't too many. Maybe I also have to be bolder next time.

Thursday & Friday

These two days were Workshop day and I was looking forward to them! However I ended up only attending half of the Thursday workshop and no Friday workshop as I handed in my thesis on Friday.

For Thursday I had chosen to attend ALVR (Advances in Language and Vision Research) and for Friday it would've been Challenge-HML (The Second Grand-Challenge and Workshop on Human Multimodal Language).

Impressions from ALVR

The Opening Words were not quite as inspirational as the ones from the main conference and instead focused on some conference statistics and outline of the workshop structure.

What followed were talks about on-going research. After that I could have also stayed longer for submissions to two challenges on Video-Guided Machine Translation & Remote Object Grounding (REVERIE).

The first talk was by Angel Chang from Simon Fraser University (Vancouver) on creating 3D worlds from text. For example one might want to describe a room which is then displayed by a 3D design software. A useful way to think about it is: Language as a common sense constraint on 3D-scene-graphs (e.g. posters usually go on walls). Angel Chang has put special effort into getting real 3D-data from scanning real rooms instead of relying on human-made models.

Following this, we heard great talks from e.g. Lucia Specia or Yoav Artzi but I most enjoyed the ones from Louis-Philippe Morency and Mark Riedl. The main reason for this was that I was exhausted from writing my thesis and their talks were the most engaging and funny.

Morency talked about how Multi-Modal systems and how they can be applied to study human behaviour. He highlighted the application of helping people with mental diseases. In the second half of his talk he described five core challenges in multi-modal learning, drawing from Multimodal Machine Learning: A Survey and Taxonomy which he co-authored.

The last two things I watched before "leaving" the conference were Mark Riedl's talk Dungeons and DQNs: Grounding Language in Shared Experience and Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data (Bender & Koller), which won the Best Theme Paper Award at ACL 2020. These two were ideal picks to end the conference for me: The former because it was so genuinely funny and well-prepared and the latter because it expressed what many people at ACL thought was lacking in our current systems.

The ALVR talks can also be found here.

Takeaways

I "got started with research" this winter, just before Corona. Therefore, I didn't have many chances to connect with professors, PhD students or any other enthusiasts at meetups, talks or reading clubs... Luckily I decided to attend ACL 2020. It was not ideal but it was a good replacement for what I had missed the last months. I got inspired to follow my research dreams and I also realized that social context always helps to orientate for what's relevant and impactful: You can take quite misleading paths when there is no guidance in the form of more experienced people talking about what they care about.

The conference also helped me a lot for my decisions to apply to PhD programs.

But still, it's not the real thing. Especially when you don't know anyone at the conference in person and have to figure out how things work. I believe I was more comfortable than many others in asking dumb questions and interacting with the presenters. But I could've done it even more.

Content-wise I am even more certain that Grounded Language is the way to go for me. Regarding NLP more general, people seem to recognize that SOTA is not everything and we should instead take a step back and reflect on what we are trying to accomplish and what our data looks like. This shift is reflected in the Best Paper Awards for Beyond Accuracy: Behavioral Testing of NLP Models with CheckList (Ribeiro et al.) and Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data (Bender and Koller).